Jazz Performer Identification Model

Run the model on your own tracks online!

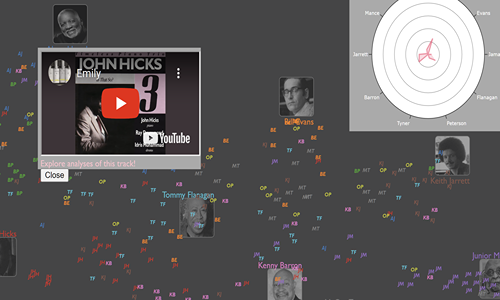

Explore the model predictions interactively!

Great musicians and artists have a unique style that is recognisably their own; arguably, this is what makes them great. Yet while the differences in style between any two performers are often intuitively evident, they can be hard to put into words. We developed an automated pipeline that identifies the pianist on a jazz recording with an accuracy more than five times better than chance.

Using deep-learning-assisted signal processing, the pipeline works directly on an audio recording and extracts a suite of musical features that together form a stylistic fingerprint. The pipeline is trained on the Cambridge Jazz Trio Database, a dataset of approximately 16 hours of commercial recordings with onset and beat annotations. It has applications in music engineering (recommendation and classification algorithms), education (feedback for performers) and wider scientific research (joint action, forensic stylometry). Our work shows how machine learning can bridge the gap between intuition and the quantifiable analysis of art, offering a paradigm applicable across many domains.
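To give a flavour of what a musical feature derived from onset and beat annotations might look like, here is a minimal sketch in Python. It is not the pipeline's actual feature set: the function names (`nearest_beat_asynchronies`, `timing_features`) and the choice of features (how far each note onset falls from its nearest beat, and how densely notes are played) are assumptions made purely for illustration.

```python
from bisect import bisect_left
from statistics import mean, stdev


def nearest_beat_asynchronies(onsets, beats):
    """Signed offset in seconds from each onset to its nearest beat.

    Hypothetical helper: negative values mean the note was played ahead
    of the beat, positive values mean it was played behind it.
    """
    if not beats:
        raise ValueError("at least one beat annotation is required")
    beats = sorted(beats)
    offsets = []
    for t in onsets:
        i = bisect_left(beats, t)
        # The nearest beat is either the one just before or just after t.
        candidates = beats[max(i - 1, 0): i + 1]
        nearest = min(candidates, key=lambda b: abs(b - t))
        offsets.append(t - nearest)
    return offsets


def timing_features(onsets, beats):
    """Summarise a performance as a small dictionary of timing features.

    Illustrative only: these three features are assumptions, not the
    features used by the model described above.
    """
    offsets = nearest_beat_asynchronies(onsets, beats)
    return {
        "mean_asynchrony": mean(offsets),
        "asynchrony_sd": stdev(offsets) if len(offsets) > 1 else 0.0,
        "onsets_per_beat": len(onsets) / len(beats),
    }


# Made-up annotations (in seconds) for a single bar at 120 beats per minute.
example_beats = [0.0, 0.5, 1.0, 1.5]
example_onsets = [0.02, 0.48, 1.03, 1.5]
example_features = timing_features(example_onsets, example_beats)
```

Feature vectors of this kind, computed per recording, could then be passed to any standard classifier; the real pipeline extracts a much richer set of features.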