Music Performance Manipulation Software

One difficulty in studying music performance experimentally is ensuring consistency and repeatability across performances. In this project, we developed a software platform that lets the researcher manipulate the audio and video feedback given to performers in real time, and in a controlled manner, during an experiment.

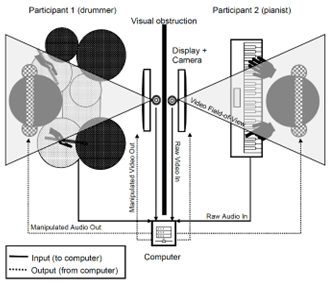

The setup resembles a conference call made on a telecommunication platform such as Zoom or Skype, albeit one where all the participants are together in the same room. Two or more musicians are seated opposite each other, with a physical barrier preventing direct visual contact between them. Instead, they communicate through a closed-circuit audiovisual system consisting of a webcam, monitor, headphones, and musical instrument, each connected to an external computer running our software. Participants in our experiments hear each other’s performance through headphones and see the feed from their partner’s webcam on a monitor in front of them.

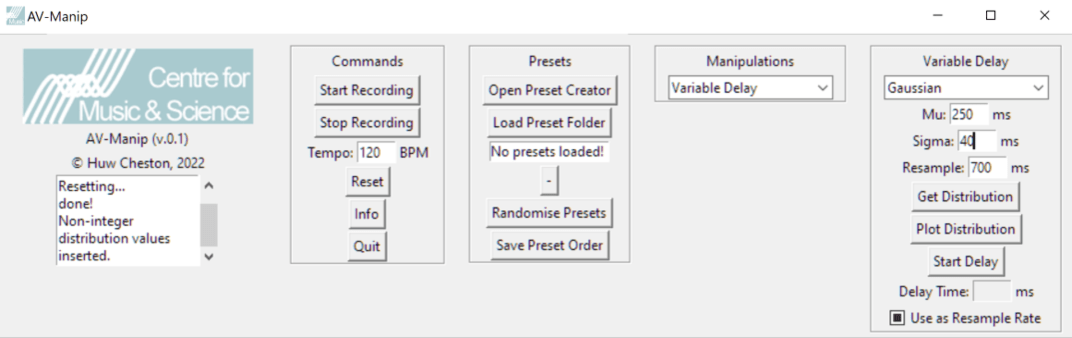

At any point during a performance, the researcher may use our software to introduce a manipulation into these feeds. Manipulations currently implemented include added delay (latency, with fixed, variable, or random times), echo, inserted pitches (e.g., artificial ‘wrong notes’), and automatic occlusion of the face and body. The platform is also designed to be extensible and can interface with any existing audio or MIDI effect in the standard VST format.
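To make the delay manipulation concrete, here is a minimal sketch of a sample-based delay line whose latency can be changed between blocks of audio. It is an illustration only, not the platform's actual implementation: the class name `DelayLine`, its methods, and the block-based interface are all assumptions made for this example.

```python
from collections import deque


class DelayLine:
    """Hypothetical delay line: output is the input delayed by `delay` samples."""

    def __init__(self, max_delay: int, delay: int = 0):
        if not 0 <= delay <= max_delay:
            raise ValueError("delay must be between 0 and max_delay")
        self.max_delay = max_delay
        self.delay = delay
        # Keep the most recent max_delay + 1 samples, pre-filled with silence.
        self._history = deque([0.0] * (max_delay + 1), maxlen=max_delay + 1)

    def set_delay(self, delay: int) -> None:
        """Change the latency, e.g. when the researcher triggers a manipulation."""
        if not 0 <= delay <= self.max_delay:
            raise ValueError("delay must be between 0 and max_delay")
        self.delay = delay

    def process(self, block):
        """Return the delayed version of one block of samples."""
        out = []
        for sample in block:
            self._history.append(sample)
            # Index -1 is the newest sample; -1 - delay is `delay` samples old.
            out.append(self._history[-1 - self.delay])
        return out


line = DelayLine(max_delay=4, delay=2)
first = line.process([1.0, 2.0, 3.0, 4.0])   # two samples of silence, then 1.0, 2.0
line.set_delay(0)
second = line.process([5.0])                 # no delay: passes straight through
```

In this sketch, an echo could be built by mixing the delayed output back with the undelayed input at a reduced gain, and a random latency by calling `set_delay` with a value drawn from `random.randint` between blocks.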

The software also provides a straightforward way to capture synchronised audio and video feeds (both manipulated and non-manipulated) from multiple sources by integrating with the open-source OpenCV library (for video) and the REAPER digital audio workstation (for audio), eliminating the need to align audio and video manually after the experiment. The user can also save and recall preset configurations for particular experiments and conditions using a simple GUI.
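As a rough illustration of what saving and recalling a preset might involve, the sketch below stores the settings for one experimental condition as a JSON file and reads them back. The field names (`audio_delay_ms`, `video_delay_ms`, `occlude_face`) and the JSON format are hypothetical; the software's real preset format is not described here.

```python
import json
from dataclasses import asdict, dataclass
from pathlib import Path


@dataclass
class Preset:
    """Hypothetical settings for one experimental condition."""
    name: str
    audio_delay_ms: int = 0
    video_delay_ms: int = 0
    occlude_face: bool = False


def save_preset(preset: Preset, path: Path) -> None:
    """Write the preset to disk as human-readable JSON."""
    path.write_text(json.dumps(asdict(preset), indent=2), encoding="utf-8")


def load_preset(path: Path) -> Preset:
    """Recreate a preset from a JSON file written by save_preset."""
    return Preset(**json.loads(path.read_text(encoding="utf-8")))
```

Keeping each condition in its own file would let a researcher recall exactly the same settings for every pair of participants, which supports the repeatability goal stated at the top of the page.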

The development of this software was supported by a Project Incubation award from Cambridge Digital Humanities. You can read the project page here.

Read a recent paper created using the software!